The Golem, by Philippe Semeria

(Overview and Framework)

This is the first of two posts on Muehlhauser and Helm’s article “The Singularity and Machine Ethics”. It is part of my ongoing, but completely spontaneous and unplanned, series on the technological singularity. Before reading this, I would suggest reading my earlier attempts to provide some overarching guidance on how to research this topic (link is above). Much of what I say here is influenced by my desire to “fit” certain arguments within that framework. That might lead to some distortion of the material I’m discussing (though hopefully not), but if you understand the framework you can at least appreciate what I’m trying to do (even if you don’t agree with it).

Assuming you’ve done that (and not really bothered if you haven’t), I shall proceed to the main topic. Muehlhauser and Helm’s article (hereinafter MH), which appears in the edited collection Singularity Hypotheses (Springer, 2013), tries to do several things, some of which will be covered in this series and some of which will not. Consequently, it behooves me to give a brief description of its contents, highlighting the parts that interest me and excluding the others.

First, it presents a version of what I’m calling the “Doomsday Argument” or the argument for the AI-pocalypse. It does so through the medium of a clever thought experiment (the Golem Genie thought experiment), which is where I get the title of this blog post. I’ll be discussing this thought experiment and the attendant argument later in this post. Second, it critiques a simple-minded response to the Doomsday Argument, one that suggests the AI-pocalypse is avoidable if we merely program AI to “want what we want”. MH argue this is unlikely to succeed because “human values are complex and difficult to specify” (MH, pp. 1-2). I definitely want to talk about this argument over the next two posts. Third, and I’m not quite sure where to place this in the overall context of the article, MH present a variety of results from human psychology and neuroscience that supposedly bolster their conclusion that human values are complex and difficult to specify. While this may well be true, I’m not sure whether this information in any way improves their critique of the simple-minded response. I’ll try to explain my reason for thinking this in part two. And finally, MH try to argue that ideal preference theories of ethics might be a promising way to avoid the AI-pocalypse. Although I’m quite sympathetic to ideal preference theories, I won’t be talking about this part of the article at all.

So, in other words, over the next two posts, I’ll be discussing MH’s Golem Genie thought experiment, and the argument for the AI-pocalypse that goes with that thought experiment. And I’ll also be discussing their critique of the simple-minded response to that argument. With that in mind, the agenda for the remainder of this post is as follows. First, I’ll outline the thought experiment and the Doomsday Argument. Second, I’ll discuss the simple-minded response, and give what I think is the fairest interpretation of that response, something that MH aren’t particularly clear about. In part two, I’ll discuss their critique of that response.

My primary goal here is to clarify the kinds of arguments being offered in favour of the Unfriendliness Thesis (part of the Doomsday Argument). As a result, the majority of this series will be analytical and interpretive in nature. While I think this is valuable in and of itself, it does mean that I tend to eschew direct criticism of the arguments being offered by MH (though I do occasionally critique and reconstruct what they say where I think this improves the argument).

1. The Golem Genie and the Doomsday Argument

One of the nice things about MH’s article is the thought experiment they use to highlight the supposed dangers of superintelligent machines. The thought experiment appeals to the concept of the “golem”. For those who don’t know, a golem is a creature from Jewish folklore. It is an animate being, created from inanimate matter, which takes a humanoid form. The golem is typically brought to life to serve some human purpose, but carrying out this purpose often leads to disastrous unintended consequences. The parallels with other forms of human technology are obvious.

Anyway, MH ask us to imagine the following scenario involving a “Golem Genie” (effectively a superpowerful Golem):

The Golem Genie: Imagine a superpowerful golem genie materialises in front of you one day. It tells you that in 50 years’ time it will return to this place, and ask you to supply it with a set of moral principles. It will then follow those principles consistently and rigidly throughout the universe. If the principles are faulty, and have undesirable consequences when followed consistently by a superpowerful being, then disaster could ensue. So it is up to you to ensure that the principles are not faulty. Adding to your anxiety is the fact that, if you don’t supply it with a set of moral principles, it will follow whichever moral principles somebody else happens to articulate to it.

The thought experiment is clever in a number of respects. First, and most obviously, it is clever in how it parallels the situation that faces us with respect to superintelligent AI. The notion is that machine superintelligence isn’t too far off (maybe only 50 years away), and once it arrives we’d want to make sure that it follows a set of goals that are relatively benign (if not better than that). The Golem Genie replicates the sense of urgency that those who worry about the technological singularity think we ought to feel. Blended into this, and adding to the sense of urgency, is the notion that if we (i.e. you and I, the concerned citizens reading this thought experiment) aren’t going to supply the genie with its moral principles, somebody else is going to do so, and we have to think seriously about how much faith we would put in these unknown others. Again, this is clever because it replicates the situation with respect to AI research and development (at least as AI-pocalyptarians see it): if we don’t make sure that the superintelligent AI has benign goals, then we leave it up to some naive and/or malicious AI developer (backed by a nefarious military-industrial complex) to supply the goals. Would we trust them? Finally, in addition to encouraging some healthy distrust of others, the thought experiment gets us to question ourselves too: how confident do we really feel about any of our own moral principles? Do we think it would be a good idea to implement them rigidly and consistently everywhere in the universe?

So, I like the thought experiment. But by itself it is not enough. Thought experiments can be misleading: their intuitive appeal can incline us toward sloppy and incomplete reasoning. We need to try to extract the logic and draw out the implications of the thought experiment (as they pertain to AI, not golem genies). That way we can develop an argument for the conclusion that superintelligent AI is something to worry about. Once we have that argument in place, we can try to evaluate it and see whether superintelligent AI really is something to worry about.

Here’s my attempt to develop such an argument. I call it the “Doomsday Argument” or the argument for the AI-pocalypse. It builds on some of the ideas in the thought experiment, but I don’t think it radically distorts the thinking behind it. (Note: the term “AI+” is used here to denote machine superintelligence).

- (1) If there is an entity that is vastly more powerful than us, and if that entity has goals or values that contradict or undermine our own, then doom (for us) is likely to follow.

- (2) Any AI+ that we create is likely to be vastly more powerful than us.

- (3) Any AI+ that we create is likely to have goals and values that contradict or undermine our own.

- (4) Therefore, if there is AI+, doom (for us) is likely to follow.
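
For readers who like to see the logical skeleton, here is one way the argument might be schematised. This is my own sketch, not something found in MH. The predicate letters are labels I am introducing purely for illustration, and I have dropped the “likely” qualifiers to keep things simple. Read $A(x)$ as “x is an AI+ that we create”, $P(x)$ as “x is vastly more powerful than us”, $U(x)$ as “x has goals or values that contradict or undermine our own”, and $D$ as “doom (for us) follows”.

```latex
\begin{aligned}
&(1)\quad \forall x\,\bigl( (P(x) \land U(x)) \rightarrow D \bigr) \\
&(2)\quad \forall x\,\bigl( A(x) \rightarrow P(x) \bigr) \\
&(3)\quad \forall x\,\bigl( A(x) \rightarrow U(x) \bigr) \\
&(4)\quad \therefore\ \bigl( \exists x\, A(x) \bigr) \rightarrow D
\end{aligned}
```

In this stripped-down form the inference goes through: if some AI+ exists, (2) and (3) give it both properties, and (1) then delivers the conclusion. Once the “likely” qualifiers are restored, the support for (4) depends on how the individual likelihoods combine, so the schema should be treated as a guide to the argument’s structure and not as a proof.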

A few comments about this argument are in order. First, I acknowledge that the use of the word “doom” might seem somewhat facetious. This is not because I desire to downplay the potential risks associated with AI+ (I’m not sure about those yet), but because I think the argument is apt to be more memorable when phrased in this way. Second, I take it that premise (1) of the argument is relatively uncontroversial. The claim is simply that any entity with goals antithetical to our own, which also has a decisive power advantage over us (i.e. is significantly faster, more efficient, and more able to achieve its goals), is going to quash, suppress and possibly destroy us. This is what our “doom” amounts to.

That leaves premises (2) and (3) in need of further articulation. One thing to note about those premises is that they assume a split between the goal architecture and the implementation architecture of AI+. In other words, they assume that the engineering (or coding) of a machine’s goals is separable from the creation of its “actuators”. One could perhaps question that separability (at least in practice). Further, premise (2) is controversial. There are some who think it might be possible to create superintelligent AI that is effectively confined to a “box” (Oracle AI or Tool AI), unable to implement or change the world to match its goals. If this is right, it need not be the case that any AI+ we create is likely to be vastly more powerful than us. I think there are many interesting arguments to explore on this issue, but I won’t get into them in this particular series.

So that leaves premise (3) as the last premise standing. For those who read my earlier posts on the framework for researching the singularity, this premise will look like an old friend. That’s because it is effectively stating the Unfriendliness Thesis (in its strategic form). Given that this thesis featured so prominently in my framework for research, it will come as no surprise to learn that the remainder of the series will be dedicated to addressing the arguments for and against this premise, as presented in MH’s article.

As we shall see, MH are themselves supporters of the Unfriendliness Thesis (though they think it might be avoidable), so it’s their defence of that thesis which really interests me. But they happen to defend the thesis by critiquing one response to it. So before I can look at their argument, I need to consider that response. The final section of this post is dedicated to that task.

2. The Naive Response: Program AI+ to “Want what we want”

As MH see it, the naive response to the Doomsday Argument would be to claim that doom is avoidable if we simply programme the AI+ to want what we want, i.e. to share our goals. By default, what we want would not be “unfriendly” to us, and so if AI+ follows those goals, we won’t have too much to worry about. QED, right?

Let’s spell this out more explicitly:

- (5) We could (easily or without too much difficulty) programme AI+ to share our goals and values.

- (6) If AI+ shared our goals and values, then (by default) it wouldn’t undermine our goals and values.

- (7) Therefore, premise (3) of the doomsday argument is false (or, at least, the outcome it points to is avoidable).

Now, this isn’t the cleanest logical reconstruction of the naive response, but I’m working with a somewhat limited source. MH never present the objection in these explicit terms — they only talk about the possibility of programming AI to “want what we want” and the problems posed by human values that are “complex and difficult to specify” — but then again they never present the Doomsday Argument in explicit terms either. Since my goal is to render these arguments more perspicuous I’m interpolating many things into the text.

But even so, this reconstruction seems pretty implausible. For one thing, it is hopelessly ambiguous with respect to a number of key variables. The main one is the intended referent of “our” in the premises (or “we” in the version of the response that MH present). Does this refer to all actually existent human beings? If so, then the argument is likely to mislead more than it enlightens. While premise (6) might be strictly true under that interpretation, its truth is trivial and ignores the main issue. Why so? Well, because many human beings have values and goals that are antithetical to those of other human beings. Indeed, human beings can have extremely violent and self-destructive goals and values. So programming an AI+ to share the values of “all” human beings (whatever that might mean) isn’t necessarily going to help us avoid “doom”.

So the argument can’t be referring to the values of all human beings. Nor, for similar reasons, can it be referring to some randomly-chosen subset of the actually existent human population, since they too might have values that are violent and self-destructive. That leaves the possibility that it refers to some idealised, elite subset of the population. This is a more promising notion, but even then there are problems. The main one is that MH use their critique as a springboard for promoting ideal preference theories of ethics, so the response can’t be reformulated in a way that seems to endorse such views. If it did, then MH’s critique would seem pointless.

In the end, I suspect the most plausible reconstruction of the argument is one that replaces “share our goals and values” with something like “have the (best available)* moral goals and values or follow (the best available) moral rules”. This would avoid the problem of making the argument unnecessarily beholden to the peculiar beliefs of particular persons, and it wouldn’t preempt the desirability of the ideal preference theories either (ideal preference theories are just a portion of the available theories of moral values and rules). Furthermore, reformulating the argument in this way would retain some of the naivete that MH are inclined to criticise.

The reformulated version of the argument might look like this:

- (5*) We could (easily or without too much difficulty) programme AI+ to have (the best available) moral goals and values, or follow (the best available) moral rules.

- (6*) If AI+ had (the best available) moral goals and values, or followed (the best available) moral rules, it would not be “unfriendly”.

- (7*) Therefore, the unfriendliness thesis is false (or at least the outcome it points to is avoidable).

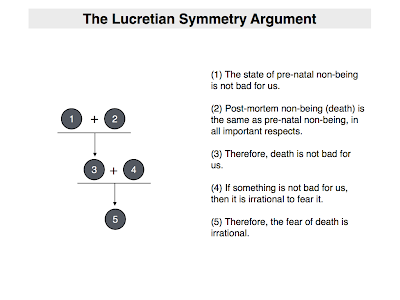

This is still messy, but I think it is an improvement. Note, however, that this reformulation would force some changes to the original Doomsday Argument. Those changes are required by the shift in focus from “our” goals and values to “moral” goals and values. As I said in my original posts, about this topic, that is probably the better formulation anyway. I have reformulated the Doomsday Argument in the diagram below, and incorporated in the naive response (strictly speaking, (6*) and (7*) are unnecessary in the following diagram but I have left them in to retain some continuity with the text of this post).

That leaves the question of where MH’s critique fits into all this. As I mentioned earlier, their critique is officially phrased as “human values are complex and difficult to specify”. This could easily be interpreted as a direct response to the original version of the naive response (specifically, a response to premise (5)), but it would be difficult to interpret it as a direct response to the revised version. Still, as I hope to show, they can be plausibly interpreted as rejecting both premises of the revised version. This is because they suggest that there are basically two ways to get an AI to have moral goals and values, or to follow moral rules: (i) the top-down approach, where the programmer supplies the values or rules as part of the original code; or (ii) the bottom-up approach, where a basic learning machine is designed and is then encouraged to learn moral values and rules on a case-by-case basis. In critiquing the top-down approach, MH call into question premise (6*). And in critiquing the bottom-up approach, they call into question premise (5*). I will show how both of these critiques work in part two.